Lagging Indicators, Leading Indicators … Let’s Start Over

What do indicators really mean? Occupational safety and health (OSH) professionals continue to debate this issue. Can indicators really measure performance of an OSH program? On one side are lagging indicators, which include common markers such as total recordable incident rate (TRIR); days away, restricted or transferred (DART) rate; and experience modification rate. These are lagging indicators because they measure after-the-fact occurrences. Data measured after the fact may be a lens into an OSH program’s failings and offer opportunities for improvement. For many years, organizations and OSH professionals have believed that these indicators tell the whole story – that low lagging indicators mean the OSH program is effective and employees are working safely, and high ones mean the opposite. That belief has led to a heavy focus on decreasing the numbers or striving for zero.

But multiple studies reveal that there is no connection between low incident rates and a safe workplace. Organizations with long track records of no OSHA recordable injuries often experience multiple fatal incidents or catastrophes (e.g., BP’s Deepwater Horizon explosion). Rates can be the result of luck, poor reporting, the randomness of incidents and many other factors.

On the other side are leading indicators, which are thought to be superior because they focus on incident prevention. Unfortunately, there isn’t a list of commonly accepted leading indicators, for a couple of reasons. First, the concept is newer to OSH professionals, and while many say they appreciate the perceived value, some confess to not really knowing how to create leading indicators that are meaningful to their organization. Second, in my experience and review of the literature, many leading indicators don’t really indicate anything; they are simply tallies of activities thought to have some preventive function. The number of training hours completed in a quarter or the number of safety committee meetings held in a year may be important data points to track and can be helpful in assessing overall OSH performance. However, they do not show that the OSH program improved as a result, because they are missing a quality component or a tie to successful prevention of something.

So, where does this leave us? Which one is better? Should we get rid of lagging indicators in favor of leading ones? There are no easy answers, but a further dive into the current situation may offer some solutions.

The End of Lagging Indicators?

The practice of a profession, like OSH, is not static. It evolves over time as new information emerges and new approaches are tried and shown to work better. The current evolution in thinking about indicators appears to be an example of that. It may not mean the end of the value of lagging indicators, but rather a better understanding of their use.

Lagging indicators can be useful because they are concrete numbers, and the methods used to calculate them are widely understood. They allow an organization to benchmark itself against others or make year-over-year internal comparisons. However, digging deeper into the incidents behind the rate provides more valuable insight, such as common injury causes, processes and work groups. Rather than focusing on reducing the aggregate number, a better strategy might be to develop processes to target a subgroup so that the organizational goal changes from reducing the overall TRIR to reducing a subset of injuries.
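For readers who track these numbers in a spreadsheet or script, the idea of looking behind the aggregate rate can be sketched in a few lines of Python. The rate uses the standard OSHA normalization of 200,000 hours (100 full-time employees working 40 hours a week for 50 weeks). The incident records, cause labels and hours worked are hypothetical values made up for illustration, not data from any organization.

```python
from collections import Counter

# Standard OSHA normalization: 100 full-time workers x 40 hours x 50 weeks.
HOURS_BASE = 200_000


def incident_rate(recordable_cases: int, hours_worked: float) -> float:
    """Return recordable cases per 100 full-time-equivalent workers."""
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return recordable_cases * HOURS_BASE / hours_worked


# Hypothetical recordable incidents for one year (illustrative only).
incidents = [
    {"cause": "slip/trip", "work_group": "field"},
    {"cause": "slip/trip", "work_group": "field"},
    {"cause": "slip/trip", "work_group": "office"},
    {"cause": "struck by object", "work_group": "field"},
    {"cause": "overexertion", "work_group": "field"},
    {"cause": "overexertion", "work_group": "warehouse"},
]
hours_worked = 400_000  # hypothetical total hours for the same year

# The aggregate number most organizations report.
overall_trir = incident_rate(len(incidents), hours_worked)

# The same data broken out by cause, to show which subset drives the rate.
cases_by_cause = Counter(record["cause"] for record in incidents)
rate_by_cause = {
    cause: incident_rate(count, hours_worked)
    for cause, count in cases_by_cause.items()
}

print(f"Overall TRIR: {overall_trir:.2f}")
for cause, rate in sorted(rate_by_cause.items(), key=lambda item: -item[1]):
    print(f"  {cause}: {rate:.2f}")
```

In this made-up data set, half of the overall rate comes from slips and trips, which is the kind of subset an organization might choose to target instead of the aggregate TRIR. The same breakdown could be repeated by work group or process.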

It should also be noted that choosing not to use lagging indicators may not be possible. The OSHA recordkeeping standard, which requires tracking and reporting of injuries to the U.S. Bureau of Labor Statistics, is mandatory for most privately run organizations. In addition, lagging indicators are often required to be reported when organizations undergo the contracting process or for an organizational review completed for various reasons by external parties.

Are Leading Indicators Better?

The National Safety Council’s Campbell Institute began to explore the issue of leading indicators in 2013 by using an expert panel from the institute’s membership and conducting surveys. Since then, five white papers have been published. The first, “Transforming EHS Performance Measurement Through Leading Indicators,” reported that 94% of respondents agreed that the use of leading indicators was an important factor in measuring OSH performance, and 93% responded that their organizations would be increasing the use of leading indicators in the next five years. However, five years later, Environmental Resources Management’s Global Safety Survey reported that “few companies are using meaningful leading indicators to evaluate the efficacy of their safety processes and programs”; 70% of respondents use lagging indicators and only 26% use any form of a leading indicator. If leading indicators are better measures of OSH performance, why is their use so limited? As with most enigmas, there is no one true answer.

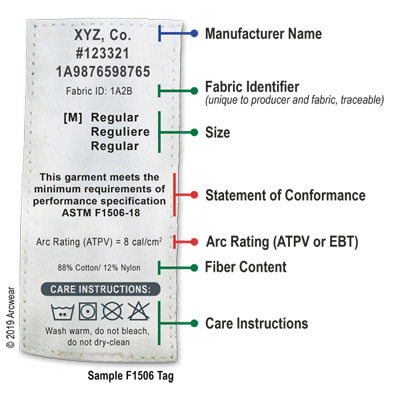

For organizations with less mature OSH programs or those that are not far along in their development of indicators beyond basic incident rates, it can be enticing to replace a lagging indicator with a tally and call it leading. The data needed is easy to collect and is probably already being collected. But this strategy fails to correctly educate the organization, particularly senior leaders, on leading OSH indicators. Incorrectly claiming a tally is anything more than a data point creates a false sense of achievement if the associated goal is reached, or a false sense of failure if it is not. It is far better for the OSH professional to learn what makes an effective leading indicator, develop those indicators with intention and integrate them into the organization’s overall performance measurement. Some simple examples of turning a tally into a leading indicator are seen in Figure 1.

Figure 1: Transforming a Tally into a Leading Indicator

One recommended resource for those wishing to better understand leading indicator development is the last of the five Campbell Institute white papers, “An Implementation Guide to Leading Indicators,” which includes multiple examples by type (e.g., hazard reports, use of PPE, safety suggestions). In addition, a rubric to develop customized indicators based on two factors – the level of organizational maturity and levels of complexity – is also provided.

Another recommended resource is one from OSHA titled “Using Leading Indicators to Improve Safety and Health Outcomes.” A word of caution, though, about both resources: not all of the examples provided have a quality component or tie to successful reduction of an OSH hazard. Many are simply tallies and would need to be modified to demonstrate improvement.

Lastly, it is important to reiterate that the use of tallies shouldn’t be completely dismissed. In tracking performance, multiple data points should be used. Establishing a goal of 5,000 training hours in a given year or achieving 95% attendance at safety committee meetings can be important, but caution is advised against suggesting that, on their own, they indicate anything about the quality or effectiveness of the OSH program.

Moving Forward

As noted earlier, the solution is not to simply stop using lagging indicators in favor of leading ones, although I will confess to having promoted that for many years. Carsten Busch reminds us in the book “If You Can’t Measure It … Maybe You Shouldn’t” that it isn’t either/or: “As is often in safety, there is not a binary choice of one or the other – it is very much a case of both and each in the right application and context.”

One approach is to balance the use of indicators. The simplest method for accomplishing this is to ensure that every lagging indicator is balanced with a leading one. Though not yet available, a revision to ANSI/ASSP Z16-1995, “Information Management for Safety and Health,” will advocate this balanced scorecard approach. ANSI/ASSP Z16 is a voluntary consensus standard originally published in the 1930s and last updated in 1995. Summer 2021 is the expected publication date of the revised edition.

Some subject matter experts advocate the concept of indicators being part of a process model or systems view. In this way, actions and activities (leading indicators) are tied to processes focused on OSH improvement that provide results (lagging indicators). See Figure 2. In his June 2019 article in Professional Safety titled “Measuring Up: Evaluating Effectiveness Rather Than Results,” Peter T. Susca also advocates making sure the results are connected to a business strategy rather than a separate goal related to OSH performance. An example of this concept is found in the sidebar to this article.

Figure 2: Tying Indicators to Organizational Process Improvement

Finally, OSH professionals would be well-served by taking a closer look at how indicators are viewed, tracked and reported on in their organizations. It is not uncommon to see a safety dashboard and a separate dashboard for other organizational indicators related to finance, market share, revenue generation and so forth. Methods that show all indicators as business indicators help to promote integration.

Conclusion

The way in which the success of an OSH program is measured needs to evolve away from sole reliance on lagging indicators and toward a more balanced approach with true leading indicators. OSH professionals should take a closer look at what their organizations are doing, understand the new methodologies and approaches, and integrate them for the purpose of continuous improvement.

About the Author: Pam Walaski, CSP, is senior program director for Specialty Technical Consultants Inc. (www.specialtytechnicalconsultants.com), a specialized management consulting firm that helps its clients enhance environmental, health and safety performance. She also serves as an adjunct faculty member for the Indiana University of Pennsylvania Safety Sciences Program. Walaski is a professional member of the American Society of Safety Professionals and is currently serving a three-year term as a director at large.

*****

Tying OSH Indicators to an Organization’s Strategic Plan

The Situation: An engineering firm provides environmental field services to its clients that include wetland delineations, habitat and species surveys, pipeline surveys and archaeological surveys. This service enjoys high client satisfaction and is targeted for a 25% increase in gross revenue by the business unit director in next year’s strategic plan. The organization’s TRIR is above the industry average as well as the benchmark set by some current clients and potential new ones. The TRIR may impact the business unit’s ability to reach its goal.

Hundreds of field staff are involved in these projects, working in all types of weather with challenging terrain and physical demands. Numerous minor injuries (e.g., twisted ankles/knees, bumps on the head) typically occur that are not emergencies but cause enough discomfort that field staff consider a visit to a medical professional. Having no medical resource to assess the injury within the organization, an employee errs on the side of caution and visits a local urgent care center, and their minor injury ends up as an OSHA recordable. Because of the remote locations of the projects and the distance from home offices, collaboration with a medical provider is not possible. The firm’s OSH Department believes some of these minor injuries could be treated with self-care, such as ice, elevation, over-the-counter pain relievers and rest. To support the business unit’s goal, the OSH Department decides to contract with an external medical triage services vendor to provide the missing medical expertise.

Leading Indicators

- Three vendors will be vetted based upon established criteria: available 24/7, phones answered by a nurse, not affiliated with a workers’ compensation carrier. The indicator will be measured by successful completion of the vetting and contracting process within a specified time frame.

- Written protocols will be developed with the vendor. The indicator will be measured by successful completion within a specified time frame.

- Training on the protocols will be developed and delivered. Other methods of communicating the protocols will include wallet cards for field staff and posters for offices. The indicator will be measured by successful completion of the training and communication delivery within a specified time frame.

- Retention of the protocols will be measured at 30 days and 60 days post-rollout, with a target retention rate of 95%. Staff who do not follow the protocols will be contacted to understand the reasons; results will be used for protocol revisions.

Lagging Indicators

- Staff satisfaction with the vendor is expected to correlate with a willingness to use them again and/or convey their satisfaction to colleagues, encouraging them to use the vendor as well. Contact within 48 hours will include a series of five questions. A positive answer to four out of the five questions for all users is the indicator.

- A tracking report will be disseminated quarterly and include the total number of each type of incident when the triage service was used and a 12-month rolling incident rate. It will also highlight incidents when use of the medical triage service resulted in a recommendation for self-care that was successful as reported by the staff person.

- The number of minor soft tissue injuries diverted from medical care to self-care will increase by at least 25% in the first year and by 50% after 18 months. As a result, the TRIR is expected to decrease, although the organization declined to set a reduction target for the aggregate TRIR.
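
The two numeric measures in the list above, a 12-month rolling incident rate and a percentage increase in injuries diverted to self-care, can be sketched as follows. This is only an illustration under stated assumptions: the monthly case counts, hours worked and diversion counts are hypothetical, and the firm’s actual report may define the window or the baseline differently.

```python
HOURS_BASE = 200_000  # standard OSHA normalization for incident rates


def rolling_incident_rate(monthly_cases, monthly_hours, window=12):
    """Return one incident rate per complete trailing window of months."""
    if len(monthly_cases) != len(monthly_hours):
        raise ValueError("case and hour series must be the same length")
    rates = []
    for end in range(window, len(monthly_cases) + 1):
        cases = sum(monthly_cases[end - window:end])
        hours = sum(monthly_hours[end - window:end])
        rates.append(cases * HOURS_BASE / hours)
    return rates


def percent_change(baseline, current):
    """Return the change from baseline to current as a percentage."""
    if baseline == 0:
        raise ValueError("baseline must be nonzero")
    return (current - baseline) / baseline * 100


# Hypothetical 14 months of recordable cases and hours worked.
monthly_cases = [2, 1, 0, 1, 2, 1, 0, 1, 1, 0, 2, 1, 0, 0]
monthly_hours = [50_000] * 14

rates = rolling_incident_rate(monthly_cases, monthly_hours)

# Hypothetical counts of injuries diverted to self-care:
# baseline period versus the first year with the triage vendor.
diversion_change = percent_change(baseline=8, current=10)
first_year_target_met = diversion_change >= 25

print([round(rate, 2) for rate in rates])
print(f"Diversions changed by {diversion_change:.0f}%")
```

A rolling window smooths out month-to-month randomness, so a single quiet or bad month does not swing the reported rate. The diversion comparison depends entirely on how the baseline is chosen, which the sidebar does not specify.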

Successful achievement of the indicators noted above will reduce the number of injuries for which medical care is sought, and the resulting reduction in incident rates will support the business unit’s achievement of its increased revenue goal.